When Gothic cathedrals rose in the Middle Ages, their soaring vaults seemed to transcend the constraints of stone. To modern eyes, they are frozen miracles—gravity-defying arcs, weight balanced by buttresses, light refracted through geometry. But to the masons who built them, they were also experiments in structural possibility. Each innovation—pointed arch, ribbed vault, flying buttress—first imitated familiar practice and then leapt beyond it.

Engineering today finds itself at a similar threshold. The tools we wield—AI-driven design engines, physics-based simulators, automated workflows—are cathedrals of software, scaffolding human intuition while hinting at futures we barely comprehend.

Matt, a product leader and programmer at Quilter, puts the tension bluntly:

“Right now we’re focused on parity with what a reasonably skilled electrical engineer would do in weeks. But the next step, of course, is superhuman outcomes—discovering solutions that were never even contemplated because they’re totally outside of the box.”

Parity—the ability of AI to replicate human-level performance—is a milestone. But if treated as an end point, it risks becoming a glass ceiling. The true frontier is superhuman: solutions outside the imagination of today’s engineers, layouts no one would sketch, optimizations across dimensions too vast for human trade-offs.

This essay traces that frontier. From the historical arc of engineering tools to the lived frustrations of practitioners, from parity’s usefulness to the disruptive promise of “superhuman” outcomes, it asks: what happens when the cathedral begins to design itself?

The Long Arc of Engineering Methods

Engineering has always been the story of extending human ability through tools. The compass and straightedge extended the draftsman’s hand. The slide rule compressed logarithmic reasoning into mechanical form. SPICE simulators compressed weeks of circuit calculations into hours of numerical modeling. Each advance followed a familiar pattern: first, mimic human intuition; then, expand beyond it.

The computer-aided design revolution of the 1980s was a case in point. Early CAD programs didn’t imagine alien geometries; they digitized drafting tables. Only later did they open up avenues like finite element analysis and integrated verification that stretched beyond what pen and vellum could achieve.

Robert Krone, who studied algebraic geometry before turning to software for automotive and electronics, embodies this arc. He recalls his academic life:

“My PhD in pure math wasn’t meant to lead to industry—but algorithms and geometry kept pulling me closer to practical problems. At Divergent3D, we created software to automate the design of auto parts. Optimization and 3D printing produced organic shapes no human engineer would sketch.”

In Krone’s story, the pivot from abstraction to application mirrors the discipline’s pivot from parity to discovery. Academic code was often a sketch, “proof of concept” sufficient to demonstrate a theorem. Production code, he notes, “had to last.” That permanence—code hardened for industry—reflects engineering’s cultural demand: reliability before novelty.

But as optimization and simulation expand, the arc shifts. No longer are tools merely compressing known calculations. They are searching across design spaces so vast, so multi-dimensional, that even expert intuition falters. The engineer’s role is less to calculate every possibility than to decide which alien possibility is worth manufacturing.

Parity is where this story pauses. Superhuman outcomes are where it accelerates.

Parity as Threshold, Not Destination

To achieve parity is to cross a threshold of trust. Engineers must first believe a tool can replicate their expertise before they will cede decisions to it. For startups, this threshold is existential: until a product demonstrates parity, enterprise buyers will not adopt.

Matt frames this in engineering terms: Quilter’s goal is to “match what a skilled engineer would do in weeks.”

Parity delivers credibility. It signals that an AI-driven tool is not science fiction but a viable replacement for traditional workflows. It gives engineers confidence that the machine’s suggestion is not wildly off-base. It gives investors confidence that markets exist.

But parity also has dangers. If treated as the end state, it limits imagination. Engineers risk using AI merely as a faster drafting table, not as a generator of alien but viable futures. Investors risk backing products that plateau once parity is achieved. And employees risk joining companies where innovation stops at replication.

Stephen Ambrose underscores the cultural cost of stopping at parity. “Enterprise buyers assume a startup is weak,” he says. “Your job is to prove competence and inspire confidence from day one.”

Proving competence requires parity. Inspiring confidence requires hinting at more.

Parity, then, is best understood as scaffolding: necessary for construction, but not the cathedral itself. To mistake the scaffold for the structure is to stop short of what engineering tools have always promised—the leap from mimicry to transcendence.

Toward Superhuman Outcomes

What, then, does “superhuman” mean in practice? It does not mean fantasy. It means solutions that are valid, manufacturable, and validated by physics—yet alien to human instinct.

Matt describes this aspiration:

“The next step, of course, is superhuman outcomes—discovering solutions that were never even contemplated.”

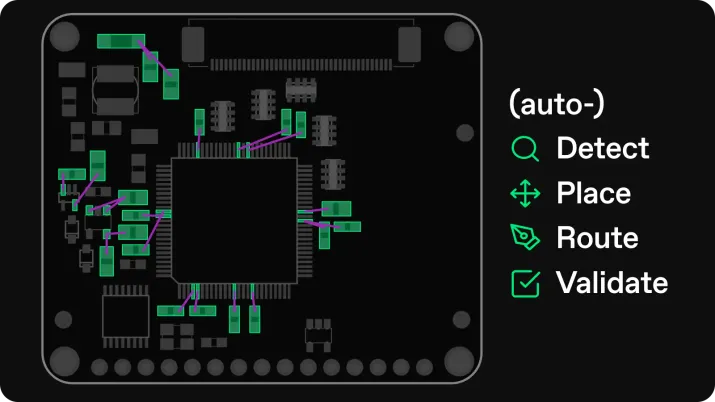

Consider PCB design. A human engineer balances power integrity, EMI, thermal dissipation, and manufacturability through experience and heuristics. An AI engine, unconstrained by those heuristics, can explore trade-offs across thousands of variables simultaneously. It may propose a trace layout that looks nonsensical to the eye but proves optimal under simulation.

This is already happening in adjacent fields. Google’s 2021 work on chip floorplanning, published in Nature, used reinforcement learning to generate floorplans comparable or superior to those of human experts in hours rather than months. Airbus has used generative design to create lattice structures in aircraft interiors that cut weight while maintaining strength. In both cases, the AI’s proposals looked “wrong” until physics proved them right.

Krone experienced this directly in auto parts: “Optimization and 3D printing produced organic shapes no human engineer would sketch.”

The credibility of superhuman outcomes hinges on validation. As Matt stresses, Quilter doesn’t simply route traces; it validates them against physics: “What’s the heat rise? Is this sufficient to carry the current?”

Without validation, superhuman outcomes are curiosities. With validation, they are revolutionary.
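
Matt’s two questions can be made concrete with a small calculation. The sketch below is not Quilter’s validation engine; it assumes the widely quoted IPC-2221 curve fit for conductor current capacity, I = k · ΔT^0.44 · A^0.725, with the cross-section A in square mils, k = 0.048 for external layers, and k = 0.024 for internal layers. The function names and the 1.378 mil-per-ounce copper thickness are illustrative assumptions.

```python
# Rough IPC-2221 style estimate of trace heat rise and current capacity.
# Assumption: the commonly quoted curve fit I = k * dT**0.44 * A**0.725
# (A in square mils). This is a sketch, not any vendor's physics model.

K_EXTERNAL = 0.048   # outer-layer constant
K_INTERNAL = 0.024   # inner-layer constant
MILS_PER_OZ = 1.378  # approximate copper thickness per oz/ft^2, in mils


def _area_sq_mils(width_mils: float, copper_oz: float) -> float:
    """Cross-sectional area of a rectangular trace, in square mils."""
    return width_mils * copper_oz * MILS_PER_OZ


def max_current_amps(width_mils, copper_oz, temp_rise_c, internal=False):
    """Current the trace can carry for a given allowed temperature rise."""
    k = K_INTERNAL if internal else K_EXTERNAL
    return k * temp_rise_c ** 0.44 * _area_sq_mils(width_mils, copper_oz) ** 0.725


def heat_rise_c(current_amps, width_mils, copper_oz, internal=False):
    """'What's the heat rise?' Invert the same fit to solve for dT."""
    k = K_INTERNAL if internal else K_EXTERNAL
    area_term = _area_sq_mils(width_mils, copper_oz) ** 0.725
    return (current_amps / (k * area_term)) ** (1 / 0.44)


def carries_current(current_amps, width_mils, copper_oz, allowed_rise_c,
                    internal=False):
    """'Is this sufficient to carry the current?' as a pass/fail check."""
    rise = heat_rise_c(current_amps, width_mils, copper_oz, internal)
    return rise <= allowed_rise_c


if __name__ == "__main__":
    # Hypothetical trace: 10 mil wide, 1 oz copper, 10 C allowed rise.
    print(carries_current(0.5, 10, 1.0, 10.0))
    print(carries_current(2.0, 10, 1.0, 10.0))
```

A check like this is deliberately conservative and simple. The point is that an unfamiliar layout earns trust only when every trace in it can answer these questions.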

For engineers, superhuman design challenges pride and habit. For investors, it signals defensibility: parity can be copied; superhuman discovery scales with data and algorithms. For aspiring hires, it represents meaning: working not on faster drafting tables but on alien discoveries that redefine their profession.

Reliability in Hardware Design

Physics, Geometry, and Standards

Circuit reliability begins in the physics: parasitics, signal integrity, thermal stresses. Design errors like sharp right-angle bends, insufficient copper width, or poorly designed ground planes often don't show up in first sketches. They emerge under load.

Direct Lessons from the Interviews

Matt put it plainly: “Every system tells you how it wants to break.” In his work, he looks early for those hidden patterns — return current paths, layer stack-ups that create uneven heat, or functions whose layout will force an awkward crossing. He said that in a recent design “the stitching was off … it technically worked, but you could see the loop inductance was going to bite us.” By rethinking the ground fills and component positioning, his team avoided failures downstream.

Robert emphasizes clarity in specs. “If you aren’t clear about what you’re solving, your solution won’t be reliable either,” he said. He recounted a project where via count minimization, without defining maximum trace delay, produced boards that passed simulations but failed in field tests due to signal reflections. “Clarity fixes half your failures,” Robert observed.
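
Robert’s example can be sketched as a toy selection problem. Everything here is hypothetical: the `RouteCandidate` type, the candidate names, and the delay figures are invented for illustration and do not come from the project he described. The sketch only shows how an optimizer that minimizes via count behaves differently once a maximum trace delay is written into the spec.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class RouteCandidate:
    """Hypothetical routing option with the two quantities from the anecdote."""
    name: str
    via_count: int
    trace_delay_ps: float


def pick_route(candidates: Sequence[RouteCandidate],
               max_delay_ps: Optional[float] = None) -> Optional[RouteCandidate]:
    """Return the fewest-via candidate that meets the delay limit, if one is set."""
    feasible = [
        c for c in candidates
        if max_delay_ps is None or c.trace_delay_ps <= max_delay_ps
    ]
    if not feasible:
        return None  # the spec cannot be met; better to learn this before fab
    # Fewest vias first; break ties with the shorter delay.
    return min(feasible, key=lambda c: (c.via_count, c.trace_delay_ps))


# Invented numbers, for illustration only.
candidates = [
    RouteCandidate("long_detour", via_count=0, trace_delay_ps=410.0),
    RouteCandidate("one_layer_hop", via_count=2, trace_delay_ps=180.0),
    RouteCandidate("direct_multi_hop", via_count=4, trace_delay_ps=150.0),
]

underspecified = pick_route(candidates)                  # objective only
specified = pick_route(candidates, max_delay_ps=200.0)   # objective plus constraint

print(underspecified.name, specified.name)
```

With no delay limit, the objective alone rewards the long detour. Stating the limit changes the answer without changing the optimizer, which is the sense in which clarity fixes failures before they happen.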

Stephen brings pragmatism into this geometry. “The perfect solution that never ships is useless,” he said. He prefers modest, well-defined fixes over chasing idealized designs that delay deployment. In one case, rather than redoing the entire stack-up, the team increased trace spacing and added guard traces, small changes that significantly improved manufacturability and reliability.

Standards & Reliability in Practice

Putting design standards into practice is hard. Tools can help, but misinterpretation or vague requirements often undermine reliability. Common pitfalls include:

- Ignoring differential pair matching or crossing split ground planes, which leads to signal integrity issues.

- Overlooking via plating tolerances and copper weights, which matter under the IPC-6012 and IPC-A-600 standards.

- Underestimating mechanical stresses in flex PCBs. For example, IPC-2223 (for flex and rigid-flex PCBs) addresses strain relief and adhesive coverlays because physical flexing introduces failure modes that rigid PCBs do not have.

These issues demand both technical attention and production feedback loops. Matt, Robert, and Stephen all pointed out that even boards that pass the best simulations fail if the standard tolerances provided by fabs are violated, or if components are placed too close to board edges. The lesson: prioritize manufacturability from the earliest design stages.

Reliability in Team Structure

The parallels between hardware reliability and team resilience are not metaphorical—they are structural. Boards that succeed do so because constraints are clear, failure modes anticipated, and margins built in. Teams that succeed do so because roles are unambiguous, responsibilities stated, workflows defensible under pressure, and failures used as learning.

Norms, Clarity, Psychological Safety

Robert said, “Unclear requirements are just delayed bugs.” That is as much about team norms as about circuit specs. When teams have ambiguous goals or shifting priorities, the result is churn, rework, and friction. Research in software engineering shows that norm clarity and psychological safety correlate with team performance and job satisfaction. A 2018 study of development teams found that both psychological safety and clarity of norms positively predicted self-assessed performance and job satisfaction, and that norm clarity was the stronger predictor.

At Quilter, these engineers described early alignment meetings where constraints are spelled out: what margins are needed, which failure modes are non-negotiable, and what tolerances on deliverable timing are acceptable. This works like defining trace width or copper pour rules in the PCB spec.

Incremental Progress & Pragmatism

Stephen’s warning that “the perfect solution that never ships is useless” applies directly to project planning. Waiting for ideal tools, ideal specs, or ideal agreement often delays reliability. Better to ship incrementally, test in real conditions, and iterate. Just as a board design can survive one failed test run when the failure is caught early, a team is more resilient if it has short feedback loops.

Teams that build small wins—fixing a small failure, clarifying a spec, adding a margin—accumulate reliability culture. As Matt observed: “You don’t wait for the lab to tell you. You build so the lab can’t surprise you.” That discipline builds not only reliable hardware but reliable team trust.

External Insights & Reliability Theory

It's worth looking outside Quilter for frameworks that have codified similar ideas.

- High Reliability Organizations (HROs) in domains like aviation, energy, and healthcare emphasize preoccupation with failure, sensitivity to operations, and resilience. McKinsey research suggests that HROs not only have higher technical standards but also emphasize coordination skills, communication, and well-defined norms.

- Reliability engineering in AI systems is increasingly studied: frameworks propose combining metrics like Mean Time Between Failures (MTBF) with formal verification and human reliability analysis to make systems trustworthy under diverse conditions.

These external frameworks mirror what Quilter’s engineers already pursue: anticipating failure, defining clear constraints, and building in margins.
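
For readers unfamiliar with the MTBF metric mentioned above, a minimal sketch follows. It assumes the textbook definition (total operating time divided by observed failures) and the usual constant-failure-rate model, under which the chance of surviving a mission of length t is exp(-t / MTBF). The function names and the example fleet are hypothetical.

```python
import math


def mtbf_hours(total_operating_hours: float, failure_count: int) -> float:
    """Mean Time Between Failures: operating time divided by observed failures."""
    if failure_count <= 0:
        raise ValueError("need at least one observed failure to estimate MTBF")
    return total_operating_hours / failure_count


def survival_probability(mission_hours: float, mtbf: float) -> float:
    """Chance of no failure over the mission, assuming a constant failure rate."""
    return math.exp(-mission_hours / mtbf)


# Hypothetical fleet: 10 boards run 1,000 hours each, with 4 failures observed.
fleet_mtbf = mtbf_hours(total_operating_hours=10 * 1000, failure_count=4)
one_mtbf_survival = survival_probability(mission_hours=fleet_mtbf, mtbf=fleet_mtbf)

print(fleet_mtbf, round(one_mtbf_survival, 3))
```

The second number is a useful corrective to intuition: under this model, a unit has only about a 37 percent chance of running for one full MTBF without failing, which is why margins matter more than averages.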

Architecture of Reliability — The Process Layer

How does Quilter translate these technical and cultural insights into durable process?

- Constraints early & shared

All major designs start with constraint documents that include specifications for signal integrity, thermal load, manufacturability. Engineers review these constraints together: mechanical, analog, digital, layout.

- Prototype feedback loops

Early boards built with test conditions (thermal cycling, vibration, humidity) to catch real-world failure modes. When failures occur, they are traced back to geometry: copper thickness, via plating, spacing.

- Code & layout reviews with domain overlap

Not just layout engineers reviewing layout, but cross-domain reviews: RF engineers, analog, digital, mechanical. Not unlike Robert’s quote: clarity arises when boundary assumptions are made explicit.

- Incremental deliverables & measurement

Rather than delaying a product for “perfect specs,” Quilter ships smaller modules, observes behavior, measures failure modes, learns, then improves. Stephen’s pragmatism: better to solve what you can now with high confidence, than wait for ideal.

The Cultural Leap

The move from parity to superhuman is not only technical. It is cultural.

Engineers bristle at black-box tools. Trust is earned through transparency, visualization, and test suites. Matt acknowledges this:

“I love having an incredibly robust suite of tests, approaching almost mathematical proofs where you can say: this works. I know for sure.”

Visualization becomes cultural glue. As Matt notes, engineers can be overwhelmed by “hundreds, thousands of datapoints.”

The challenge is not only generating solutions but showing them in ways humans can trust.

Startups embody this leap. Ambrose notes: “In enterprise, red tape kills ambition. In startups, you’re free to build and execute ideas immediately.”

Superhuman outcomes depend on this freedom. Enterprises, with layers of risk management, hesitate to adopt alien solutions. Startups, with scrappiness, make the leap first.

Herbert Simon’s The Sciences of the Artificial (1969) framed design as adapting environments to human goals. Andy Clark’s Natural-Born Cyborgs (2003) argued that tools are cognitive extensions. In both, tools don’t merely replicate; they redefine. AI design engines extend cognition into realms too vast for direct intuition.

The leap, then, is dual: technical (from parity to discovery) and cultural (from trust in replication to trust in exploration). Engineers must shift from craftsman to curator, from solver to validator, from creator of every detail to orchestrator of possibilities.

Who Engineers Become

If tools now propose alien but valid solutions, who are engineers?

They are no longer only craftsmen. They are curators—choosing among options the AI presents. They are ethicists—deciding which solutions align with manufacturability, cost, sustainability. They are orchestrators—balancing teams, tools, and trade-offs.

Ambrose captures the spirit: “Scrappy isn’t about cutting corners. It’s about being resourceful with what you have.”

Resourcefulness shifts from manually solving every constraint to knowing which tunnel to walk through when the AI has carved several through the mountain.

For engineers, this identity shift can be unsettling. Pride has long rested on personal ingenuity, the “elegant hack.” In a superhuman future, pride may rest on discernment—knowing which alien idea to trust.

For investors, the implication is cultural resilience. Startups that nurture this new engineering identity will outpace those who cling to parity. For aspiring employees, the message is opportunity: to join not just a company but a profession redefining itself.

Possibility

The history of engineering is the history of thresholds. The compass extended the draftsman’s reach. CAD digitized drafting. Simulation extended analysis. Each first mimicked, then transcended.

Parity is the latest threshold. Necessary, but not sufficient. To stop at parity is to stop at scaffolding. The cathedral is superhuman outcomes—solutions unimaginable without AI, yet grounded in physics, validated, and manufacturable.

Matt’s call is clear: the next step is discovering “solutions that were never even contemplated.”

Krone’s journey shows that abstraction becomes tangible when optimization explores beyond human sketches. Ambrose reminds us that startups, scrappy and immediate, are the crucibles where this leap begins.

For engineers: embrace discomfort. For investors: recognize that parity is not the finish line but the on-ramp. For aspiring hires: see startups like Quilter as places where not just products, but identities, are forged.

We are no longer only building circuits. We are building cathedrals of possibility—structures that design themselves, and in doing so, reshape what it means to be an engineer.